Music and Audio Research Lab: BirdVox Internship

Project Summary

During the summer of 2018, I worked at the New York University Music and Audio Research Lab (MARL) as a software engineering and research intern on the BirdVox project.

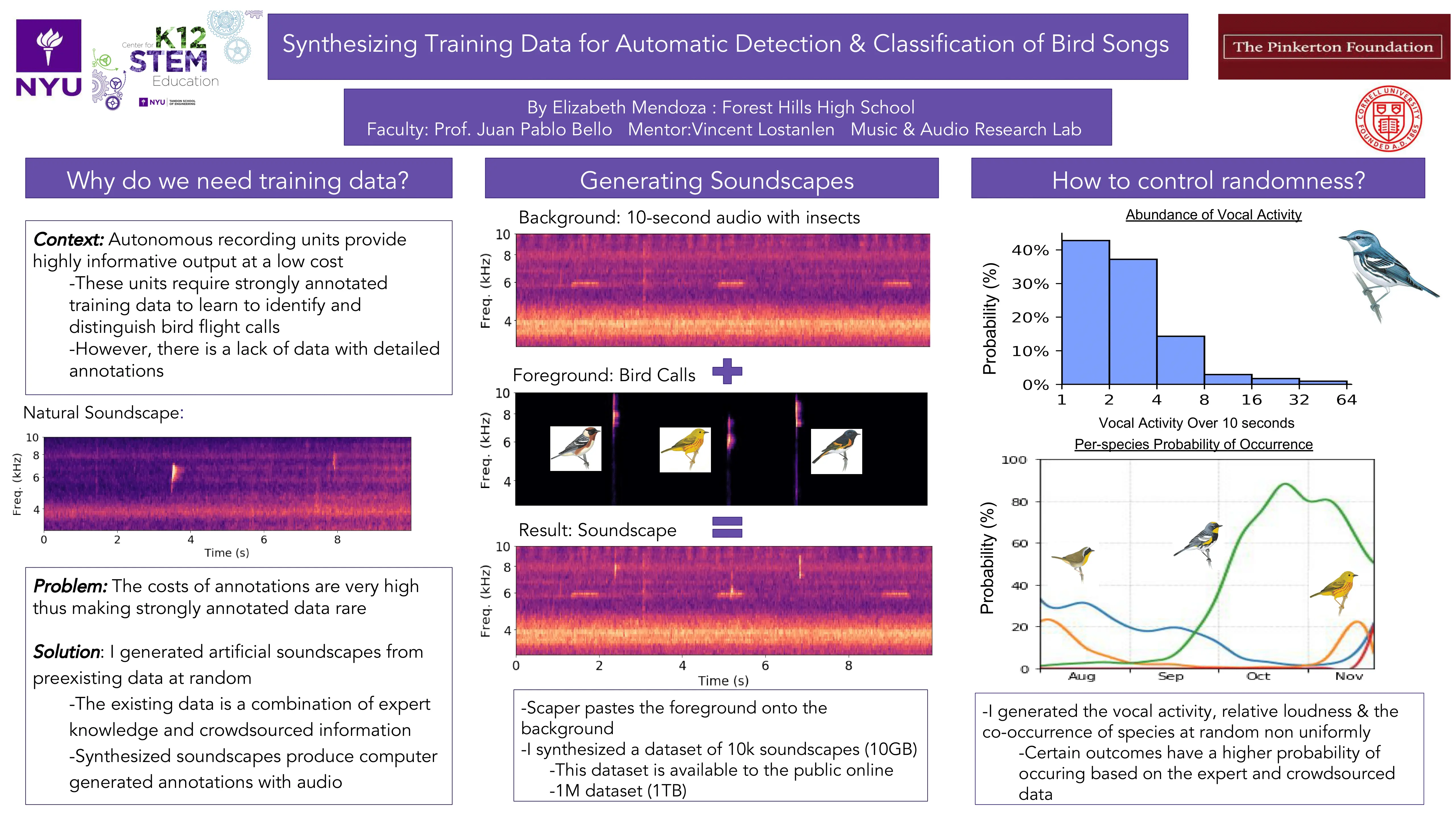

My internship focused on generating synthetic training data for machine learning models that classify free-flying birds by species.

My research was later presented at the 2019 NEMISIG (North East Music Information Special Interest Group) convention.

- Skills: Python, Audacity

- Date: July, 2018 - August, 2018

Background

BirdVox is a collaboration between the Cornell Lab of Ornithology and NYU MARL that aims to use machine learning models to monitor bird migration patterns.

In theory, audio recorders should be able to capture snippets of bird calls over a period of months, and machine learning models should then be able to identify the species behind each call. However, the annotations needed to test the accuracy of these models were incredibly expensive and time-consuming to produce.

My internship aimed to address this issue by creating synthetic training data that would reflect the real-world distribution of flight calls among different species, while simultaneously generating annotation sheets to verify the models' performance.

Project Breakdown

To synthesize these soundscapes, I mixed natural sounds from various pre-recorded sources. I started with the CLO-43SD dataset, which contains 5,428 labeled audio flight calls from 43 different species of North American wood-warblers. I wrote a Python script to isolate the flight calls that contained little or no background noise and organized them by species.

This organization allowed me to produce a CSV file that recorded how often each species occurred, as well as how many calls each species produced on average per recording, so that the synthetic soundscapes could better mimic natural ones.
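The per-species statistics described above could be computed along the following lines. This is a minimal sketch, not the original script: it assumes each labeled call is available as a (recording ID, species code) pair, and the function names, column names, and example species codes are all hypothetical.

```python
import csv
import io
from collections import Counter, defaultdict


def species_stats(calls):
    """Summarize (recording_id, species) pairs per species.

    Returns rows of (species, recordings_with_species, mean_calls_per_recording).
    """
    per_recording = defaultdict(Counter)  # species -> {recording_id: call count}
    for recording_id, species in calls:
        per_recording[species][recording_id] += 1

    rows = []
    for species in sorted(per_recording):
        counts = per_recording[species]
        n_recordings = len(counts)
        mean_calls = sum(counts.values()) / n_recordings
        rows.append((species, n_recordings, round(mean_calls, 2)))
    return rows


def to_csv(rows):
    """Render the summary rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["species", "recordings", "mean_calls_per_recording"])
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical input: the recording IDs and species codes are made up.
example_calls = [
    ("rec1", "AMRE"), ("rec1", "AMRE"), ("rec1", "BTBW"),
    ("rec2", "AMRE"), ("rec3", "BTBW"),
]
print(to_csv(species_stats(example_calls)))
```

The resulting table can then serve as the sampling distribution when deciding which species, and how many calls, to place in each synthetic soundscape.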

Next, I turned to BirdVox-DCASE-20k, a dataset of 20,000 recordings in which 50% of the soundscapes contain at least one bird vocalization. I used the script to pick out the soundscapes devoid of any bird sounds.
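Selecting the bird-free recordings amounts to filtering a label sheet on a presence flag. The sketch below assumes a CSV with one row per recording and a 0/1 flag column; the column names `itemid` and `hasbird` and the example rows are assumptions made for illustration and may not match the dataset's actual label file.

```python
import csv
import io


def select_empty_backgrounds(label_csv_text, id_column="itemid", flag_column="hasbird"):
    """Return the IDs of recordings whose bird-presence flag is 0."""
    reader = csv.DictReader(io.StringIO(label_csv_text))
    return [row[id_column] for row in reader if row[flag_column].strip() == "0"]


# Hypothetical label sheet; the clip IDs are made up.
example_labels = """itemid,hasbird
clip_a,1
clip_b,0
clip_c,0
"""
print(select_empty_backgrounds(example_labels))
```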

These empty backgrounds were then remixed to include flight calls sampled at random according to the CLO-43SD distribution. The flight calls were added at various signal-to-noise ratios (SNRs) in order to test the accuracy of bioacoustic models under different noise conditions.
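The two ideas in this step, drawing species in proportion to how often they occur and scaling a call to a target SNR before adding it to a background, can be sketched as follows. This is an illustration under stated assumptions rather than the original code: SNR is taken here as the RMS ratio between the scaled call and the whole background clip, the helper names are hypothetical, and plain Python lists stand in for audio buffers to keep the sketch dependency-free (NumPy arrays would be the usual choice in practice).

```python
import math
import random


def rms(signal):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def mix_at_snr(background, call, snr_db, onset):
    """Add `call` to `background` starting at sample index `onset`.

    The call is scaled so that 20*log10(rms(scaled call) / rms(background))
    equals `snr_db`. Returns the mixed signal and the gain that was applied.
    """
    if onset < 0 or onset + len(call) > len(background):
        raise ValueError("call does not fit inside the background")
    gain = rms(background) * 10 ** (snr_db / 20) / rms(call)
    mixed = list(background)
    for i, sample in enumerate(call):
        mixed[onset + i] += gain * sample
    return mixed, gain


def sample_species(species_counts, k, rng):
    """Draw k species with probability proportional to their counts."""
    species = list(species_counts)
    weights = [species_counts[s] for s in species]
    return rng.choices(species, weights=weights, k=k)


# Hypothetical example: a noise background and a short sine-wave "call".
rng = random.Random(0)
background = [rng.uniform(-0.1, 0.1) for _ in range(1000)]
call = [math.sin(2 * math.pi * i / 20) for i in range(200)]
mixed, gain = mix_at_snr(background, call, snr_db=6.0, onset=300)

measured = 20 * math.log10(rms([gain * x for x in call]) / rms(background))
print(round(measured, 6))
print(sample_species({"AMRE": 3, "BTBW": 1}, k=5, rng=rng))
```

Varying `snr_db` across soundscapes yields calls that range from clearly audible to nearly buried in the background, which is what makes the resulting dataset useful for stress-testing a classifier.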

Results

After completing the Python scripts and verifying the distribution of the data, I synthesized a large-scale dataset known as BirdVox-scaper-10k, featuring over 1 TB of data.

The BirdVox-scaper-10k dataset contains 9,983 artificial soundscapes. Each soundscape lasts exactly ten seconds and contains one or several avian flight calls from up to 30 different species of New World warblers (Parulidae). Each audio file is accompanied by an annotation file that gives the start time and end time of each flight call in the corresponding soundscape, as well as the species of warbler that produced it.
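To illustrate what such an annotation file might hold, here is a minimal sketch that places calls at random onsets inside a ten-second soundscape and writes one row per call. The column names, the CSV layout, and the example species codes and call durations are assumptions made for this example; the published annotation files may be formatted differently.

```python
import csv
import io
import random

SOUNDSCAPE_DURATION = 10.0  # seconds; each soundscape lasts exactly ten seconds


def place_calls(calls, rng, duration=SOUNDSCAPE_DURATION):
    """Give each (species, call_duration) a random onset that keeps the call
    fully inside the soundscape; return (start, end, species) sorted by start."""
    annotations = []
    for species, call_duration in calls:
        if call_duration > duration:
            raise ValueError("call is longer than the soundscape")
        start = rng.uniform(0.0, duration - call_duration)
        annotations.append((round(start, 3), round(start + call_duration, 3), species))
    return sorted(annotations)


def annotations_to_csv(annotations):
    """Render annotations as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["start_time", "end_time", "species"])
    writer.writerows(annotations)
    return buffer.getvalue()


# Hypothetical calls: species codes and durations (in seconds) are made up.
example = place_calls([("AMRE", 0.15), ("BTBW", 0.2), ("AMRE", 0.1)], random.Random(42))
print(annotations_to_csv(example))
```

Because the annotations are generated at the same moment the calls are placed, they are exact by construction, which is the main advantage of synthetic soundscapes over hand-labeled field recordings.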

This dataset is one of the largest of its kind to come with complete annotations and is published for free use on Zenodo for the testing, training, or deployment of bioacoustic classification models.

Reflection

This internship was my first dive into the world of audio research, and it taught me how broad the overlap between computer science and media can be. I learned about the various ways audio can be measured and how to write algorithms that accurately simulate real-world scenarios. The experience also proved extremely useful in my later internship at MARL, which likewise focused on machine learning.